Hi, DevOps fans. Welcome to a series of articles devoted to AWS Fargate, autoscaling and Terraform. I assume that you have read the four parts on how to deploy a web application on AWS Fargate using Terraform and, as a result, you are familiar with all the Terraform code related to that.

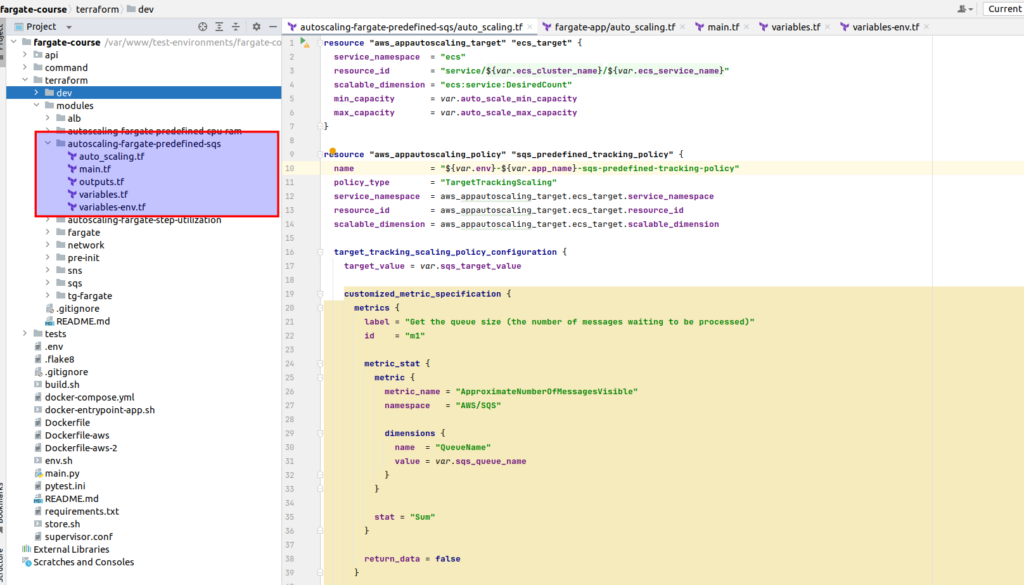

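In this tutorial I will show you how to set up autoscaling with a custom CloudWatch metric built on top of the predefined SQS metric. For that purpose we will use a Terraform module. As always, we will start with the physical file structure: the autoscaling-fargate-predefined-sqs module consists of auto_scaling.tf, main.tf, variables.tf, variables-env.tf and outputs.tf.

Here is the code for the auto_scaling.tf file: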

```hcl
resource "aws_appautoscaling_target" "ecs_target" {
  service_namespace  = "ecs"
  resource_id        = "service/${var.ecs_cluster_name}/${var.ecs_service_name}"
  scalable_dimension = "ecs:service:DesiredCount"
  min_capacity       = var.auto_scale_min_capacity
  max_capacity       = var.auto_scale_max_capacity
}

resource "aws_appautoscaling_policy" "sqs_predefined_tracking_policy" {
  name               = "${var.env}-${var.app_name}-sqs-predefined-tracking-policy"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs_target.service_namespace
  resource_id        = aws_appautoscaling_target.ecs_target.resource_id
  scalable_dimension = aws_appautoscaling_target.ecs_target.scalable_dimension

  target_tracking_scaling_policy_configuration {
    target_value = var.sqs_target_value

    customized_metric_specification {
      # m1: number of messages waiting in the queue
      metrics {
        label = "Get the queue size (the number of messages waiting to be processed)"
        id    = "m1"

        metric_stat {
          metric {
            metric_name = "ApproximateNumberOfMessagesVisible"
            namespace   = "AWS/SQS"

            dimensions {
              name  = "QueueName"
              value = var.sqs_queue_name
            }
          }
          stat = "Sum"
        }
        return_data = false
      }

      # m2: number of running tasks of the service
      # (published in ECS/ContainerInsights only when Container Insights is enabled)
      metrics {
        label = "Get the ECS running task count (the number of currently running tasks)"
        id    = "m2"

        metric_stat {
          metric {
            metric_name = "RunningTaskCount"
            namespace   = "ECS/ContainerInsights"

            dimensions {
              name  = "ClusterName"
              value = var.ecs_cluster_name
            }

            dimensions {
              name  = "ServiceName"
              value = var.ecs_service_name
            }
          }
          stat = "Average"
        }
        return_data = false
      }

      # e1: backlog per task; the only series returned, so it is the
      # value that target tracking keeps close to target_value
      metrics {
        label       = "Calculate the backlog per instance"
        id          = "e1"
        expression  = "m1 / m2"
        return_data = true
      }
    }
  }
}
```

At the top we register an autoscaling target. It is exactly the same as the one we used with the target tracking policy earlier in the series, so there is nothing to discuss here. What really deserves your attention is target_tracking_scaling_policy_configuration: that is where the customized_metric_specification is defined. This metric is called backlog per instance, and it is built from a simple math expression: m1 / m2.

Now we need to understand what m1 is. It is the number of messages (jobs) that are waiting in the queue to be processed. The AWS predefined metric that provides this data is called ApproximateNumberOfMessagesVisible.

m2 is the number of running ECS tasks of our service (the metric is filtered by both the ClusterName and ServiceName dimensions). So backlog per instance is, in fact, the ratio between the number of jobs waiting in the queue and the number of running ECS tasks.
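One prerequisite is easy to miss: RunningTaskCount lives in the ECS/ContainerInsights namespace, and ECS publishes metrics there only when Container Insights is enabled for the cluster. Without it, m2 has no data points and the policy most likely has nothing to act on. Below is a minimal sketch of enabling it, assuming the cluster is defined with the aws_ecs_cluster resource. The resource label and cluster name are hypothetical; the fargate module from the earlier parts may create its cluster differently, and only the setting block matters here.

```hcl
# Hypothetical cluster definition: only the containerInsights setting is the point.
resource "aws_ecs_cluster" "sample" {
  name = "dev-sample-cluster" # hypothetical name

  # Without this setting, ECS/ContainerInsights metrics such as
  # RunningTaskCount (used as m2) are not published for the cluster.
  setting {
    name  = "containerInsights"
    value = "enabled"
  }
}
```

If your cluster already has Container Insights enabled (for example, through the account-level default), no change is needed.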

And this is not something I invented. Here is the relevant documentation: link. Those articles focus on EC2, so some extrapolation to ECS is required, but they explain the essence rather well. The first question that naturally arises is: why do we need such a complicated thing? Can't we simply use ApproximateNumberOfMessagesVisible as the autoscaling metric? Here is the answer:

“The issue with using a CloudWatch Amazon SQS metric like ApproximateNumberOfMessagesVisible for target tracking is that the number of messages in the queue might not change proportionally to the size of the Auto Scaling group that processes messages from the queue. That’s because the number of messages in your SQS queue does not solely define the number of instances needed. The number of instances in your Auto Scaling group can be driven by multiple factors, including how long it takes to process a message and the acceptable amount of latency (queue delay).”

That is why we need something like backlog per instance: take the number of messages in the queue and divide it by the fleet's running capacity, which for an Auto Scaling group is the number of instances in the "InService" state. In our case we do not have EC2 instances but ECS tasks; the principle is still the same.

The most important thing you need to do is determine how much latency your application can accept. Here is a good explanation of the process:
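Because backlog per instance divides by the number of running tasks, it makes sense to keep the denominator from being zero by design. With zero tasks the ratio m1 / m2 is undefined, so this metric is not a reliable way to scale a service out from zero. A minimal sketch of one way to guard against that is a possible variant of the auto_scale_min_capacity declaration in variables.tf. The description and validation block are my own addition, not part of the original module. Note that a minimum capacity of 1 only keeps at least one task desired; a crashing task can still briefly bring the running count down.

```hcl
# Possible variant of the declaration in variables.tf (validation is an assumption, not original code)
variable "auto_scale_min_capacity" {
  type        = number
  description = "Minimum number of ECS tasks; at least 1 because e1 = m1 / m2 divides by the running task count"

  # Reject 0 at plan time, so the backlog per instance denominator is not zero by design
  validation {
    condition     = var.auto_scale_min_capacity >= 1
    error_message = "The auto_scale_min_capacity value must be at least 1, because the backlog per instance metric divides by the running task count."
  }
}
```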

“As an example, let’s say that you currently have an Auto Scaling group with 10 instances and the number of visible messages in the queue (ApproximateNumberOfMessages) is 1500. If the average processing time is 0.1 seconds for each message and the longest acceptable latency is 10 seconds, then the acceptable backlog per instance is 10 / 0.1, which equals 100 messages. This means that 100 is the target value for your target tracking policy. When the backlog per instance reaches the target value, a scale-out event will happen. Because the backlog per instance is already 150 messages (1500 messages / 10 instances), your group scales out, and it scales out by five instances to maintain proportion to the target value.”

Please read this carefully, several times, and make sure you understand what it means: that is the method you need to apply to calculate the target value properly in production.
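To make the arithmetic of that example concrete, here is a small sketch that expresses it with Terraform locals. The local names are hypothetical, and the inputs are the illustrative numbers from the quote, not measurements; in a real project you would plug in the processing time you actually measured and the latency you can accept. Durations are given in milliseconds so that every division is exact. The required_tasks value is a rough approximation of what target tracking aims for, not a reproduction of its internal algorithm.

```hcl
# Hypothetical helper: derive the target value from latency requirements.
# All inputs are the illustrative numbers from the AWS example above.
locals {
  avg_processing_ms     = 100   # 0.1 s to process one message
  acceptable_latency_ms = 10000 # 10 s longest acceptable queue delay

  # Acceptable backlog per task = latency / processing time = 100 messages.
  # This is the number to pass to the module as sqs_target_value.
  acceptable_backlog_per_task = floor(local.acceptable_latency_ms / local.avg_processing_ms)

  # Current state from the example: 1500 visible messages, 10 running tasks.
  visible_messages         = 1500
  running_tasks            = 10
  current_backlog_per_task = local.visible_messages / local.running_tasks # 150, above the target

  # Tasks needed to bring the backlog per task back to the target: 15,
  # i.e. five more than the current 10, matching the quote.
  required_tasks = ceil(local.visible_messages / local.acceptable_backlog_per_task)
}
```

The module call could then receive sqs_target_value = local.acceptable_backlog_per_task instead of a hard-coded number. Keep in mind that the task count is still capped by auto_scale_max_capacity: with a maximum of 3, the service would stop at 3 tasks even if the backlog asks for more.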

Here are the rest of the files of the autoscaling Terraform module (a small note: outputs.tf is empty in this case):

```hcl
# main.tf

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">=5.32.1"
    }
  }

  required_version = ">=0.14.5"
}
```

```hcl
# variables.tf

variable "ecs_cluster_name" {
  type = string
}

variable "ecs_service_name" {
  type = string
}

variable "app_name" {
  type = string
}

variable "auto_scale_min_capacity" {
  type = number
}

variable "auto_scale_max_capacity" {
  type = number
}

variable "sqs_target_value" {
  type = number
}

variable "sqs_queue_name" {
  type = string
}
```

```hcl
# variables-env.tf

variable "account_id" {
  type        = string
  description = "AWS Account ID"
}

variable "env" {
  type        = string
  description = "Environment name"
}

variable "project" {
  type        = string
  description = "Project name"
}

variable "region" {
  type        = string
  description = "AWS Region"
}
```

Finally, here is an example of how the module is used:

```hcl
module "auto_scaling_fargate_predefined_sqs" {
  source = "../../modules/autoscaling-fargate-predefined-sqs"

  account_id = var.account_id
  env        = var.env
  project    = var.project
  region     = var.region

  auto_scale_min_capacity = 1
  auto_scale_max_capacity = 3
  sqs_target_value        = 10
  sqs_queue_name          = "dev_sample_queue"

  ecs_cluster_name = module.fargate.ecs_cluster_name
  ecs_service_name = module.fargate.ecs_service_name
  app_name         = module.fargate.app_name
}
```

Thank you for your attention.

P.S. If you want to go through all the material at once, in a fast and convenient way and with detailed explanations, you are welcome to join my course “AWS Fargate DevOps: Autoscaling with Terraform at practice”; here you may find a coupon with a discount.