Hi, terraform fans.

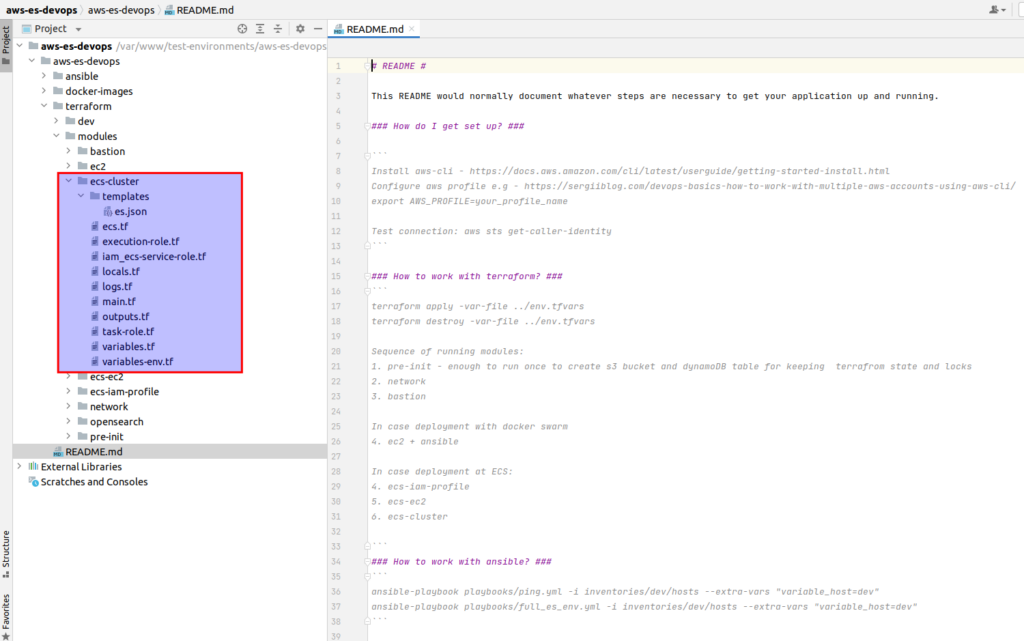

At previous article we deployed EC2 instanced for our ECS cluster. That is the last article related to the theme “How to deploy an Elasticsearch cluster at AWS using ECS and terraform”. It is time to investigate ecs cluster terraform module. As always we will start from physical file’s structure:

Let’s open an ecs.tf file, where the main logic is located:

resource "aws_ecs_cluster" "es-cluster" {

name = "es-cluster"

}

data "template_file" "es" {

template = file("${path.module}/templates/es.json")

vars = {

docker_image_url_es = var.docker_image_url_es

region = var.region

}

}

resource "aws_ecs_task_definition" "es" {

family = "es"

container_definitions = data.template_file.es.rendered

volume {

name = "esdata"

host_path = "/usr/share/elasticsearch/data"

}

volume {

name = "esconfig"

host_path = "/usr/share/elasticsearch/config/elasticsearch.yml"

}

requires_compatibilities = ["EC2"]

cpu = "1024"

memory = "1024"

# role that the Amazon ECS container agent and the Docker daemon can assume

execution_role_arn = aws_iam_role.ec2_ecs_execution_role.arn

# role that allows your Amazon ECS container task to make calls to other AWS services

task_role_arn = aws_iam_role.ecs_task_role.arn

}

resource "aws_ecs_service" "es-cluster" {

name = "es-cluster-service"

cluster = aws_ecs_cluster.es-cluster.id

task_definition = aws_ecs_task_definition.es.arn

desired_count = 3

placement_constraints {

type = "distinctInstance"

}

}So at 1st we define our ecs cluster. Please, remember that cluster name should be compatible with ECS_CLUSTER parameter at ecs config located at ec2 instances (look at userdata.sh.tpl from previous article). Without that magic glue – nothing would be working. Then we define a template file, where we will keep the task definition. We will return to it a little bit later. Below we have the task definition by itself. Here we are saying that we want to use the template already mentioned above. Then we have an essential section related to volumes.

We are going to keep our Elasticsearch data at host, so here we define the hostpath. ECS will mount that volume for the docker container. The similar case is with our yml Elasticsearch config. Current host path resources are created at our user data (look at userdata.sh.tpl from previous article). Then we have to use the requires_compatibilities option to inform ECS that we are using EC2 launch type. Here we also can apply memory and cpu constraints for our task.

There are two essential IAM roles that you need to understand to work with AWS ECS. AWS differentiates between a task execution role, which is a general role that grants permissions to start the containers defined in a task, and a task role that grants permissions to the actual application once the container is started. Let’s dive deeper into what both roles.

So, 1st one is execution_role_arn. This role is not used by the task itself. As you can notice, I left here the comment: “role that the Amazon ECS container agent and the Docker daemon can assume”. So, in fact, it is used by the ECS agent and container runtime environment to prepare the containers to run. Now let’s open execution-role.tf:

resource "aws_iam_role" "ec2_ecs_execution_role" {

name = "ec2-ecs-execution-role"

path = "/"

assume_role_policy = data.aws_iam_policy_document.ec2_ecs_execution_policy.json

}

data "aws_iam_policy_document" "ec2_ecs_execution_policy" {

statement {

actions = ["sts:AssumeRole"]

principals {

type = "Service"

identifiers = ["ecs-tasks.amazonaws.com"]

}

}

}

resource "aws_iam_role_policy_attachment" "ec2_ecs_execution_role_attachment" {

role = aws_iam_role.ec2_ecs_execution_role.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"

}Here we allow assuming action and then we tell what exactly we allow to assume – in that case we allow access to ECS tasks service. Then there is predefined AWS role which is called AmazonECSTaskExecutionRolePolicy, which describes what exactly do we allow. In short, it allows us to communicate with AWS docker registry and gives us possibility to expose logging information, and in our case it is enough (you can get more information around that at my online course at udemy).

The 2d one role is task_role_arn. It grants additional AWS permissions required by your application once the container is started. Let’s open task-role.tf where we define all things related to current role:

resource "aws_iam_role" "ecs_task_role" {

name = "ecs-task-role"

path = "/"

assume_role_policy = data.aws_iam_policy_document.ecs_task_policy.json

}

data "aws_iam_policy_document" "ecs_task_policy" {

statement {

actions = ["sts:AssumeRole"]

principals {

type = "Service"

identifiers = ["ecs-tasks.amazonaws.com"]

}

}

}

resource "aws_iam_policy" "ec2_es_plugin_describe_policy" {

name = "ec2-es-plugin-describe-policy"

policy = <<EOF

{

"Statement": [

{

"Action": [

"ec2:DescribeInstances"

],

"Effect": "Allow",

"Resource": [

"*"

]

}

],

"Version": "2012-10-17"

}

EOF

}

resource "aws_iam_role_policy_attachment" "ec2_es_describe_policy_attachment" {

role = aws_iam_role.ecs_task_role.name

policy_arn = aws_iam_policy.ec2_es_plugin_describe_policy.arn

}

resource "aws_iam_role_policy_attachment" "ecs_task_role_s3_attachment" {

role = aws_iam_role.ecs_task_role.name

policy_arn = "arn:aws:iam::aws:policy/AmazonS3FullAccess"

}Here you can notice familiar for you assume part, and then there is the policy that required for ec2 es plugin + we allow S3 access for making snapshots. For learning purposes it is OK, but for production – it is too broad permissions – better create some narrowed access only for some concrete S3 bucket where do you plan to save your Elasticsearch snapshots. I am providing it here only to show you that you need to provide permissions for the S3 Elasticsearch plugin – otherwise it would not be working. Generally I am not going to cover s3 Elasticsearch plugin in details – you may read more about it at official es documentation

Ok, so suppose roles issues can be considered to be closed. Let’s go to the next block within our ecs.tf file – it is the definition of ecs service by itself. So, we pass here cluster, task definition, desired count.

And now, attention, please, essential thing – placement_constraints – distinctInstance. What does it mean? It means that we want to have one running es container at every instance. You have to remember that we are keeping Elasticsearch data at instance storage. We don’t want to have the case when 2 Elasticsearch containers will try to write data at the same time. It is even hard to imagine what would happen then.

And that is almost all. The only thing which we have to examine is task definition at templates/es.json:

[

{

"name": "es-node",

"image": "${docker_image_url_es}",

"memory": 1024,

"cpu": 1024,

"resourceRequirements": null,

"essential": true,

"portMappings": [

{

"hostPort": 9200,

"containerPort": 9200,

"protocol": "tcp"

},

{

"hostPort": 9300,

"containerPort": 9300,

"protocol": "tcp"

}

],

"environment": [

{

"name": "ES_JAVA_OPTS",

"value": "-Xms512m -Xmx512m"

},

{

"name": "REGION",

"value": "${region}"

}

],

"environmentFiles": [],

"secrets": null,

"mountPoints": [

{

"sourceVolume": "esdata",

"containerPath": "/usr/share/elasticsearch/data/",

"readOnly": false

},

{

"sourceVolume": "esconfig",

"containerPath": "/usr/share/elasticsearch/config/elasticsearch.yml",

"readOnly": false

}

],

"volumesFrom": null,

"hostname": null,

"user": null,

"workingDirectory": null,

"extraHosts": null,

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "ec2-ecs-es",

"awslogs-region": "${region}",

"awslogs-stream-prefix": "ec2-es-log-stream"

}

},

"ulimits": [

{

"name": "nofile",

"softLimit": 65536,

"hardLimit": 65536

}

],

"dockerLabels": null,

"dependsOn": null,

"repositoryCredentials": null

}

]If you are interested in details what all that stuff means – then, please visit my course at udemy. Sorry, but it would take too much text to describe it at one article. OK, great, let’s finally apply our module. Implementation is rather simple, the only variable that we have to pass is image url.

terraform {

backend "s3" {

bucket = "terraform-state-aws-es-devops"

dynamodb_table = "terraform-state-aws-es-devops"

encrypt = true

key = "dev-cluster-ecs.tfstate"

region = "eu-central-1"

}

}

data "terraform_remote_state" "network" {

backend = "s3"

config = {

bucket = "terraform-state-aws-es-devops"

key = "dev-network.tfstate"

region = var.region

}

}

provider "aws" {

allowed_account_ids = [var.account_id]

region = var.region

}

module "ecs-cluster" {

source = "../../modules/ecs-cluster"

account_id = var.account_id

env = var.env

project = var.project

region = var.region

docker_image_url_es = "004571937517.dkr.ecr.eu-central-1.amazonaws.com/udemy-aws-es-node:latest"

}And here we are – my congratulations – now you know how to deploy high available Elasticsearch cluster at AWS with ECS using terrafom. If you are interesting at details, e,g what is going on under the hood – how ECS agent brings Elasticsearch containers, how to debug issues related to Elasticsearch cluster at ECS cluster. Or maybe you would like to know how all it looks like at AWS console side after applying all terraform modules, which AWS pitfalls are waiting for you if you are going to use current solution basing at my own commercial experience – then welcome to my course at udemy. As the reader of that blog you are also getting possibility to use coupon for the best possible low price.