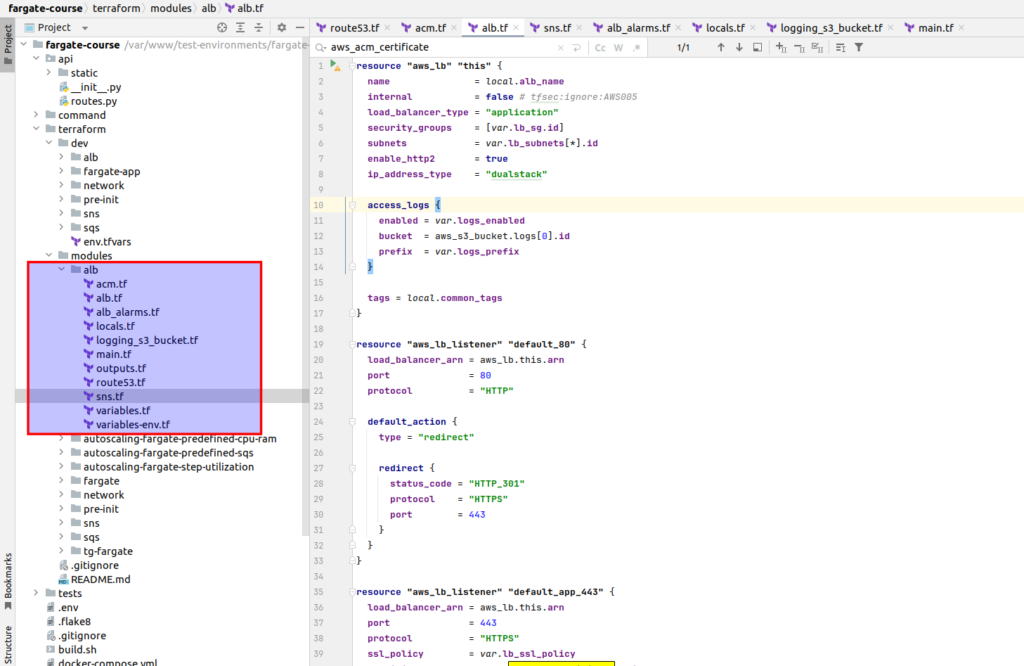

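Hi, and welcome to the second part of this detailed tutorial on how to deploy a web application to AWS Fargate using Terraform. In the first part we created everything related to the AWS network. In this part we will concentrate on the Terraform implementation of the AWS ALB. The module consists of the following files, each of which is covered below: acm.tf, alb.tf, logging_s3_bucket.tf, sns.tf, alb-alarms.tf, route53.tf, locals.tf, variables.tf, variables-env.tf and main.tf.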

Let’s start by defining the aws_acm_certificate data source, which looks up the already issued certificate that the ALB HTTPS listener will use:

# acm.tf

data "aws_acm_certificate" "main" {

domain = var.main_domain

statuses = ["ISSUED"]

most_recent = true

}

Below is the main Terraform code for the ALB, where we use the network state information as variables to define the subnets and the security group. We also define 2 listeners:

- on port 80, where all traffic is redirected to HTTPS

- on port 443, where we attach the SSL certificate.

Just as a reminder from the first part: I assume that you have bought a domain and delegated it to AWS Route 53, and that you have also generated a certificate for it using AWS Certificate Manager (a video on how to do this is available for free in my Udemy course; see Section 3, Lecture 8: Applying terraform – Part 3: ALB terraform module and AWS Certificate Manager).

# alb.tf

resource "aws_lb" "this" {

name = local.alb_name

internal = false # tfsec:ignore:AWS005

load_balancer_type = "application"

security_groups = [var.lb_sg.id]

subnets = var.lb_subnets[*].id

enable_http2 = true

ip_address_type = "dualstack"

access_logs {

enabled = var.logs_enabled

bucket = aws_s3_bucket.logs[0].id

prefix = var.logs_prefix

}

tags = local.common_tags

}
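One thing to watch in the resource above: `access_logs` reads `aws_s3_bucket.logs[0].id`, but the bucket is created with `count`, so when `var.logs_bucket` is left at its default of `null` that index does not exist and the plan fails. A minimal sketch of one way around this, assuming a `dynamic` block is acceptable in your setup, is to generate `access_logs` only when the bucket exists. It is meant as a replacement for the resource above, not an addition to it, and the `depends_on` line is my own assumption, added so that the bucket policy is in place before the ALB first tries to write logs:

```hcl
resource "aws_lb" "this" {
  name               = local.alb_name
  internal           = false # tfsec:ignore:AWS005
  load_balancer_type = "application"
  security_groups    = [var.lb_sg.id]
  subnets            = var.lb_subnets[*].id
  enable_http2       = true
  ip_address_type    = "dualstack"

  # Emit access_logs once per existing log bucket:
  # zero times when var.logs_bucket is null, once otherwise.
  dynamic "access_logs" {
    for_each = aws_s3_bucket.logs
    content {
      enabled = var.logs_enabled
      bucket  = access_logs.value.id
      prefix  = var.logs_prefix
    }
  }

  tags = local.common_tags

  # Assumption: create the bucket policy first so log delivery is allowed
  depends_on = [aws_s3_bucket_policy.alb_logs]
}
```

With this shape the module can be called without a log bucket at all, which matches the `default = null` on `logs_bucket`.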

resource "aws_lb_listener" "default_80" {

load_balancer_arn = aws_lb.this.arn

port = 80

protocol = "HTTP"

default_action {

type = "redirect"

redirect {

status_code = "HTTP_301"

protocol = "HTTPS"

port = 443

}

}

}

resource "aws_lb_listener" "default_app_443" {

load_balancer_arn = aws_lb.this.arn

port = 443

protocol = "HTTPS"

ssl_policy = var.lb_ssl_policy

certificate_arn = data.aws_acm_certificate.main.arn

default_action {

type = "fixed-response"

fixed_response {

content_type = "text/plain"

message_body = "Access denied"

status_code = "403"

}

}

}

As a best practice, it is good to send the ALB access logs to AWS S3. Here is the code for that logic:

# logging_s3_bucket.tf

resource "aws_s3_bucket" "logs" {

count = var.logs_bucket == null ? 0 : 1

bucket = var.logs_bucket

acl = "private" # tfsec:ignore:AWS002

server_side_encryption_configuration {

rule {

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

lifecycle_rule {

id = "delete"

enabled = true

expiration {

days = var.logs_expiration

}

}

force_destroy = var.logs_bucket_force_destroy

tags = local.common_tags

}
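A note on provider versions: the module pins the AWS provider to 5.x, where the inline `acl`, `server_side_encryption_configuration` and `lifecycle_rule` arguments of `aws_s3_bucket` are marked as deprecated in favour of standalone resources. The sketch below shows what the equivalent standalone resources could look like, assuming the inline blocks are removed from the bucket above. The resource names `logs` are my choice, and I left the ACL out on the assumption that the bucket should stay private, which is the default for new buckets:

```hcl
# Default encryption for the log bucket (ALB access logs support SSE-S3 only)
resource "aws_s3_bucket_server_side_encryption_configuration" "logs" {
  count  = var.logs_bucket == null ? 0 : 1
  bucket = aws_s3_bucket.logs[0].id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# Expire log objects after var.logs_expiration days
resource "aws_s3_bucket_lifecycle_configuration" "logs" {
  count  = var.logs_bucket == null ? 0 : 1
  bucket = aws_s3_bucket.logs[0].id

  rule {
    id     = "delete"
    status = "Enabled"

    # Empty filter: the rule applies to every object in the bucket
    filter {}

    expiration {
      days = var.logs_expiration
    }
  }
}
```

Do not keep both forms at once: managing the same setting inline and through a standalone resource makes the two fight over the bucket configuration.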

data "aws_iam_policy_document" "alb_logs_s3" {

count = var.logs_bucket == null ? 0 : 1

statement {

sid = "AlbS301"

actions = ["s3:PutObject"]

resources = ["${aws_s3_bucket.logs[0].arn}/${var.logs_prefix}/AWSLogs/${var.account_id}/*"]

principals {

identifiers = ["arn:aws:iam::${local.lb_account_id}:root"]

type = "AWS"

}

}

statement {

sid = "AlbS302"

actions = ["s3:PutObject"]

resources = ["${aws_s3_bucket.logs[0].arn}/${var.logs_prefix}/AWSLogs/${var.account_id}/*"]

principals {

identifiers = ["delivery.logs.amazonaws.com"]

type = "Service"

}

condition {

test = "StringEquals"

values = ["bucket-owner-full-control"]

variable = "s3:x-amz-acl"

}

}

statement {

sid = "AlbS303"

actions = ["s3:GetBucketAcl"]

resources = [aws_s3_bucket.logs[0].arn]

principals {

identifiers = ["delivery.logs.amazonaws.com"]

type = "Service"

}

}

}
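The first statement takes its principal from `local.lb_account_id`, a hand-maintained map of Elastic Load Balancing account IDs per region (defined in locals.tf further down). As an alternative, the AWS provider ships an `aws_elb_service_account` data source that returns the same account for the region the provider is configured with. A minimal sketch, assuming your region is one the data source supports; the names `current` and `alb_logs_s3_alt` are hypothetical:

```hcl
# Elastic Load Balancing account for the provider's current region
data "aws_elb_service_account" "current" {}

# Same as statement "AlbS301" above, with the principal looked up
# instead of taken from the region map in locals.tf
data "aws_iam_policy_document" "alb_logs_s3_alt" {
  count = var.logs_bucket == null ? 0 : 1

  statement {
    sid       = "AlbS301"
    actions   = ["s3:PutObject"]
    resources = ["${aws_s3_bucket.logs[0].arn}/${var.logs_prefix}/AWSLogs/${var.account_id}/*"]

    principals {
      identifiers = [data.aws_elb_service_account.current.arn]
      type        = "AWS"
    }
  }
}
```

The trade-off is one less table to maintain against one more lookup at plan time; the explicit map keeps the module readable without provider documentation at hand.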

resource "aws_s3_bucket_policy" "alb_logs" {

count = var.logs_bucket == null ? 0 : 1

bucket = aws_s3_bucket.logs[0].id

policy = data.aws_iam_policy_document.alb_logs_s3[0].json

}

It is also always a good idea to attach some CloudWatch alarms:

# sns.tf

data "aws_sns_topic" "alarm_topic" {

name = var.alarm_sns_topic_name

}

# alb-alarms.tf

resource "aws_cloudwatch_metric_alarm" "alb_5xx" {

alarm_name = format("%s-%s", local.name_prefix, "dev-alb-5xx")

comparison_operator = "GreaterThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "HTTPCode_ELB_5XX_Count"

namespace = "AWS/ApplicationELB"

period = "300"

statistic = "Sum"

threshold = var.alb_5xx_threshold

datapoints_to_alarm = "1"

dimensions = {

LoadBalancer = aws_lb.this.arn_suffix

}

treat_missing_data = "notBreaching"

alarm_actions = [data.aws_sns_topic.alarm_topic.arn]

}

resource "aws_cloudwatch_metric_alarm" "target_5xx" {

alarm_name = format("%s-%s", local.name_prefix, "dev-target-5xx")

comparison_operator = "GreaterThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "HTTPCode_Target_5XX_Count"

namespace = "AWS/ApplicationELB"

period = "300"

statistic = "Sum"

threshold = var.target_5xx_threshold

datapoints_to_alarm = "1"

dimensions = {

LoadBalancer = aws_lb.this.arn_suffix

}

treat_missing_data = "notBreaching"

alarm_actions = [data.aws_sns_topic.alarm_topic.arn]

}

To forward traffic from Route 53 to the ALB, we need to create an alias A record that points our subdomain at the ALB DNS name:

# route53.tf

data "aws_route53_zone" "alias" {

for_each = local.alias_zones

name = each.key

}

locals {

alias_zones = toset([

for alias in var.create_aliases :

alias["zone"]

])

alias_fqdns_with_zones = {

for alias in var.create_aliases : format("%s.%s", alias["name"], alias["zone"]) => alias["zone"]

}

}
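To make the two locals concrete, here is what they evaluate to for the single alias used later in this part (`flask` in the `sergiitest.website` zone). The `example_*` names are hypothetical and exist only for this illustration:

```hcl
locals {
  # Same shape as var.create_aliases
  example_aliases = [
    { name = "flask", zone = "sergiitest.website" }
  ]

  # => toset(["sergiitest.website"])
  example_zones = toset([
    for alias in local.example_aliases : alias["zone"]
  ])

  # => { "flask.sergiitest.website" = "sergiitest.website" }
  example_fqdns = {
    for alias in local.example_aliases :
    format("%s.%s", alias["name"], alias["zone"]) => alias["zone"]
  }
}
```

The record resource that follows iterates over the second map: `each.key` is the full record name and `each.value` selects the matching hosted zone from the data source.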

resource "aws_route53_record" "alias" {

for_each = local.alias_fqdns_with_zones

zone_id = data.aws_route53_zone.alias[each.value].zone_id

name = each.key

type = "A"

alias {

name = aws_lb.this.dns_name

zone_id = aws_lb.this.zone_id

evaluate_target_health = false

}

}

Finally, below is the remaining Terraform code of the module: locals and variables.

# locals.tf

locals {

name_prefix = format("%s-%s", var.project, var.env)

alb_name = format("%s-%s", local.name_prefix, "test")

common_tags = {

Env = var.env

ManagedBy = "terraform"

Project = var.project

}

lb_account_id = lookup({

"us-east-1" = "127311923021"

"us-east-2" = "033677994240"

"us-west-1" = "027434742980"

"us-west-2" = "797873946194"

"af-south-1" = "098369216593"

"ca-central-1" = "985666609251"

"eu-central-1" = "054676820928"

"eu-west-1" = "156460612806"

"eu-west-2" = "652711504416"

"eu-south-1" = "635631232127"

"eu-west-3" = "009996457667"

"eu-north-1" = "897822967062"

},

var.region

)

}

# variables.tf

variable "main_domain" {

description = "The main domain for the ACM certificate"

type = string

}

variable "vpc" {}

variable "lb_sg" {

description = "The ALB security group"

}

variable "lb_subnets" {}

variable "logs_enabled" {

description = "ALB app logging enabled"

type = bool

}

variable "logs_prefix" {

description = "The ALB app logs prefix"

type = string

}

variable "logs_bucket" {

type = string

description = "ALB Logs bucket name"

default = null

}

variable "logs_expiration" {

type = number

description = "ALB Logs expiration (S3)"

}

variable "logs_bucket_force_destroy" {

type = bool

default = false

description = "Force terraform destruction of the ALB Logs bucket?"

}

variable "lb_ssl_policy" {

description = "The ALB ssl policy"

type = string

}

variable "create_aliases" {

type = list(map(string))

description = "List of DNS Aliases to create pointing at the ALB"

}

variable "alarm_sns_topic_name" {

type = string

}

variable "alb_5xx_threshold" {

type = number

default = 20

}

variable "target_5xx_threshold" {

type = number

default = 20

}

# variables-env.tf

variable "account_id" {

type = string

description = "AWS Account ID"

}

variable "env" {

type = string

description = "Environment name"

}

variable "project" {

type = string

description = "Project name"

}

variable "region" {

type = string

description = "AWS Region"

}

# main.tf

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.21"

}

}

required_version = "~> 1.6"

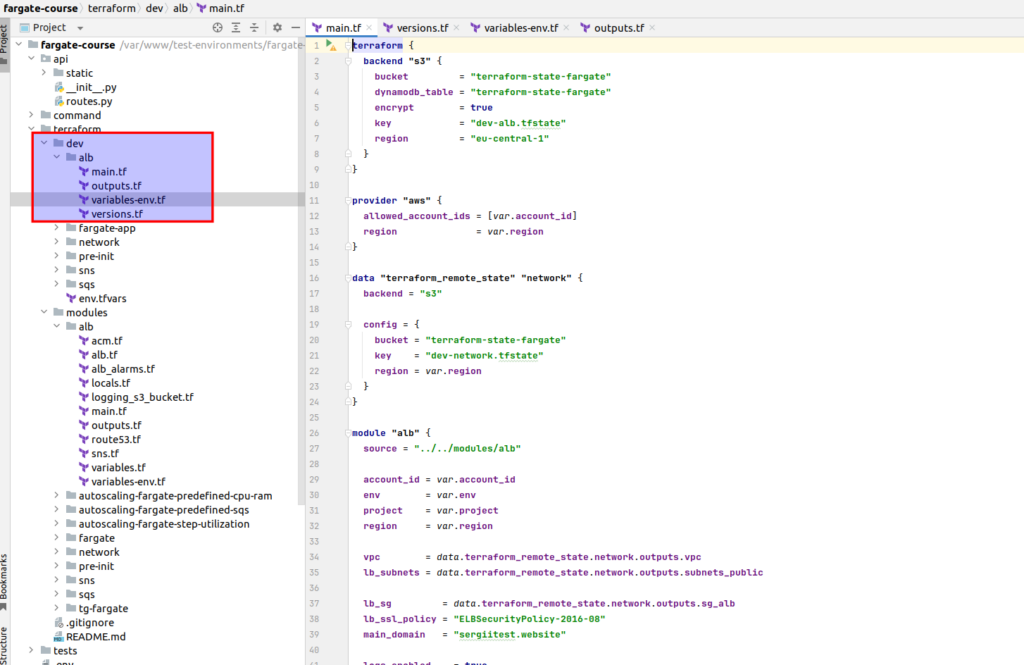

}Now, more pleasant part – ALB terrafom module realization :

# main.tf

terraform {

backend "s3" {

bucket = "terraform-state-fargate"

dynamodb_table = "terraform-state-fargate"

encrypt = true

key = "dev-alb.tfstate"

region = "eu-central-1"

}

}

provider "aws" {

allowed_account_ids = [var.account_id]

region = var.region

}
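The provider block above and the module call below read `var.account_id`, `var.env`, `var.project` and `var.region`, none of which has a default, so they have to be supplied at plan time. One common way is a `terraform.tfvars` file next to main.tf. A sketch with placeholder values that I made up (only the region is taken from the backend configuration above):

```hcl
# terraform.tfvars (hypothetical values, replace with your own)
account_id = "123456789012" # placeholder AWS account ID
env        = "dev"          # placeholder environment name
project    = "myproject"    # placeholder project name
region     = "eu-central-1" # same region as the state bucket above
```

Because `allowed_account_ids` is set from `account_id`, a wrong value here makes Terraform refuse to run against the account, which is a useful guard against applying to the wrong environment.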

data "terraform_remote_state" "network" {

backend = "s3"

config = {

bucket = "terraform-state-fargate"

key = "dev-network.tfstate"

region = var.region

}

}

module "alb" {

source = "../../modules/alb"

account_id = var.account_id

env = var.env

project = var.project

region = var.region

vpc = data.terraform_remote_state.network.outputs.vpc

lb_subnets = data.terraform_remote_state.network.outputs.subnets_public

lb_sg = data.terraform_remote_state.network.outputs.sg_alb

lb_ssl_policy = "ELBSecurityPolicy-2016-08"

main_domain = "sergiitest.website"

logs_enabled = true

logs_prefix = "dev-flask"

logs_bucket = "dev-lb-flask-logs"

logs_expiration = 90

create_aliases = [

{

name = "flask"

zone = "sergiitest.website"

}

]

alarm_sns_topic_name = "udemy-dev-alerts"

}

We also need to save some ALB reference information in our state, as it will be required in the Fargate Terraform part:
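Note that the root-level outputs that follow read `alb_dns_name`, `listener_443_arn` and `arn_suffix` from `module.alb`, so the module itself has to export values under those names. The module's own outputs file is not shown in this part; a minimal sketch of what it could look like, assuming it simply exposes attributes of the resources defined in alb.tf:

```hcl
# modules/alb/outputs.tf (assumed file name and contents)

output "alb_dns_name" {
  value = aws_lb.this.dns_name
}

output "listener_443_arn" {
  value = aws_lb_listener.default_app_443.arn
}

output "arn_suffix" {
  value = aws_lb.this.arn_suffix
}
```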

# outputs.tf

output "alb_dns_name" {

value = module.alb.alb_dns_name

}

output "listener_443_arn" {

value = module.alb.listener_443_arn

}

output "arn_suffix" {

value = module.alb.arn_suffix

}

Finally, here are the files that pin the Terraform and provider versions and declare the environment variables:

# versions.tf

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "5.21.0"

}

}

required_version = "1.6.1"

}

# variables-env.tf

variable "account_id" {

type = string

description = "AWS Account ID"

}

variable "env" {

type = string

description = "Environment name"

}

variable "project" {

type = string

description = "Project name"

}

variable "region" {

type = string

description = "AWS Region"

}

OK, great, congratulations: you now know how to deploy an AWS network and an ALB using Terraform. We are ready to move on to AWS Fargate itself, which will be covered in the next part. If you do not want to miss it, subscribe to the newsletter. If you would rather go through all the material at once in a fast and convenient way, with detailed explanations, then welcome to my course, “AWS Fargate DevOps: Autoscaling with Terraform at practice”; here you may find a coupon with a discount.