Hi, Elasticsearch fans.

At current, 2d article, we will continue deep investigation of Elasticsearch cluster (the 1st part can be found here). At current article we will speak in details about:

- what is shard

- how to choose properly the number of shards

- how to choose properly the size of the shard

- how number and size of the shards influence at performance

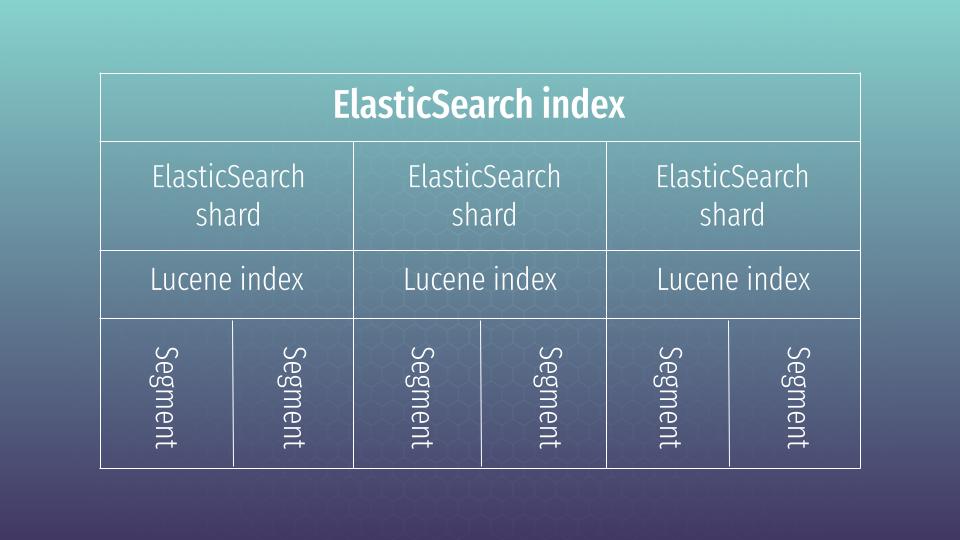

So, let’s go. As you remember from the 1st part, shard – that is the logical and physical division of the index. But in fact, that is the Lucene index. You can say what, how can it be and what is that Lucene? Apache Lucene is a free and open-source search engine software library. If you will read more about Apache Lucene and dig rather deep inside of Elasticsearch Java source code, then, SURPRISE, you will find, that Elasticsearch is a super powered Lucene manager with some additional functions and a super good REST API. And all that makes that tool so popular at the market. Here small scheme that shows how Lucene is used by Elasticsearch engine from higher perspective:

You may ask me why I am talking about so low level things? This is because Lucene has to search through all the segments in sequence, not in parallel. Having a little number of segments improves search performances. You may say – ok then we can have only several segments. Not to the end – little amount of segments, with a lot of data, transforms automatically in a big size for every segment. And segments are constantly rearranged during delete/update operations. Big segment size can negatively affect the cluster’s ability to recover from failure or you simply can meet with disk space problems during segments merging. As a result choosing the right number of shards is important, the same as choosing storage space. So, how to choose it in the right way?

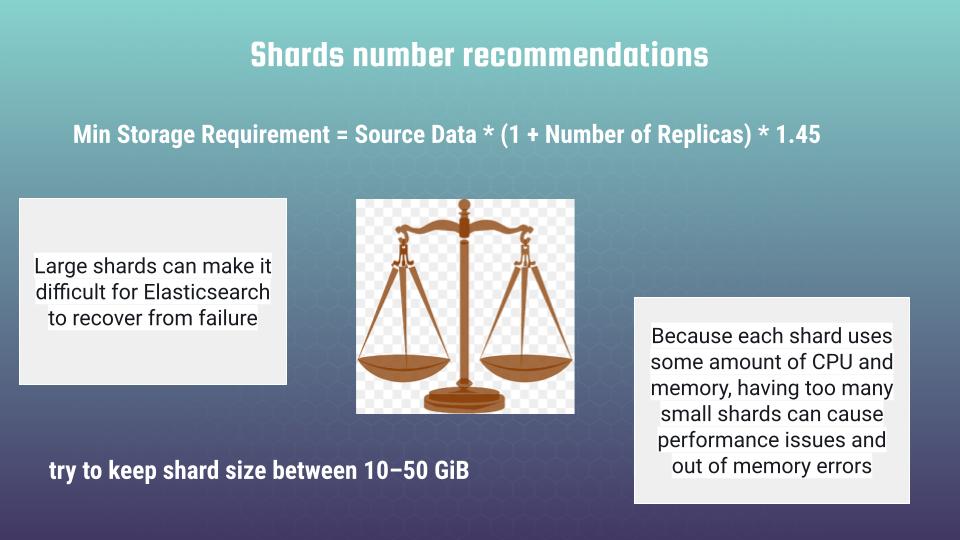

At first you have to know your storage requirements. There is a formula that can help to calculate it in a correct way:

Min Storage Requirement = Source Data * (1 + Number of Replicas) * 1.45So here we have:

- Source Data – that is the number of Gb our index takes at disk. We can easily check it by running next command at console: curl -XGET http://localhost:9200/_cat/indices?v 172.27.73.50:9200

- Next we have to multiply it at number of replicas we want to have

- And in the end there is a magic coefficient 1.45. For sure, next question appears: where does it come from? 🙂 According to Elasticsearch official documentation, that is experimental value that reflects different overheads. Mostly, it is storage overhead caused by merging Lucene shards overhead, what we just mentioned above 😉

Insufficient storage space is one of the most common causes of Elasticsearch cluster instability. From my commercial experience appears that it is even better to add 20-30% at “Min Storage Requirement”, after calcultaing it according to above mentioned formula. So, seems we deal with the storage size. What about shard size?

Shard size should be calculated taking into consideration 2 opposite factors:

- Large shards can make it difficult for Elasticsearch to recover from failure

- Because each shard uses some amount of CPU and memory, having too many small shards can cause performance issues and out of memory errors

Shards should be small enough that the underlying server can handle them, but not so small that they place needless strain on the hardware. There is a “GOLDEN” rule, that appears from practice – try to keep shard size between 10–50 Gb. Now, when we know the recommended shard size, we can calculate the number of shards for our index. As you can guess, special formula exists for that 🙂

Approximate Number of Primary Shards = (Source Data + Room to Grow) * (1 + Indexing Overhead) / Desired Shard SizeRemember that we can set primary shards size only before creating index and it can’t be changed after. You may change the number of replicas dynamically – but not the number of primary shards. That is why we have to take into consideration the index growth (Room to Grow in formula) – if we don’t want to recreate the index constantly and reindex our whole data :). In case of Indexing Overhead, you can take it as 10%, which gives as 1.1.coefficient. Let’s take some example. Imagine, that our source data takes 30 Gb, we assume + 20 Gb growth in closest year. Let’s take also that we want to take shard size to be 20Gb (personally I always choose that value), It gives us the next result:

Approximate Number of Primary Shards = (30 + 20) * 1.1 / 20 = 2.75 -> that gives us 3 shard

So, while having the number of shards you may choose the number of instances. In our example we can easily start with 3 node cluster solutions. Though there is still one essential thing – hardware. How to choose it properly? Let’s discuss it at next article. Hope to see you soon.

P.S.

New articles would be added gradually, so if you are interested at current topic, please visit that page regularly or subscribe to my newsletter. But if you are not ready to wait – then I propose you to view all that material at my on-line courses at udemy. Below are the links to the courses, where you can find a lot of useful and, first of all, practical information about Elasticsearch: