Hi, Elasticsearch fans. As probably all of you know, indexing of documents at Elasticsearch can appear to be a rather often operation. Changing fields mapping or document relationships, changing the number of shards and many other things force us to make reindexing of whole data. And when we have great amounts of data – then it appears to be a great problem. At that article we will speak how to perform indexing in the most efficient and fast way.

First of all – use bulk requests. That will yield much better performance than single-document index requests. How does it work? We are gathering some package of documents and then ask Elasticsearch to index it at once. First try to index 100 documents at once, then 200, then 400 – until you will notice that indexing is slowing down, rather than becoming faster. Let’s assume that you found that threshold around 400. In that case leave some buffer to not overload the node and stay with 300. To perform bulk operations you may use Bulk Elasticsearch API or use commit+flush operation while using programming languages, e.g at PHP, Python, Java

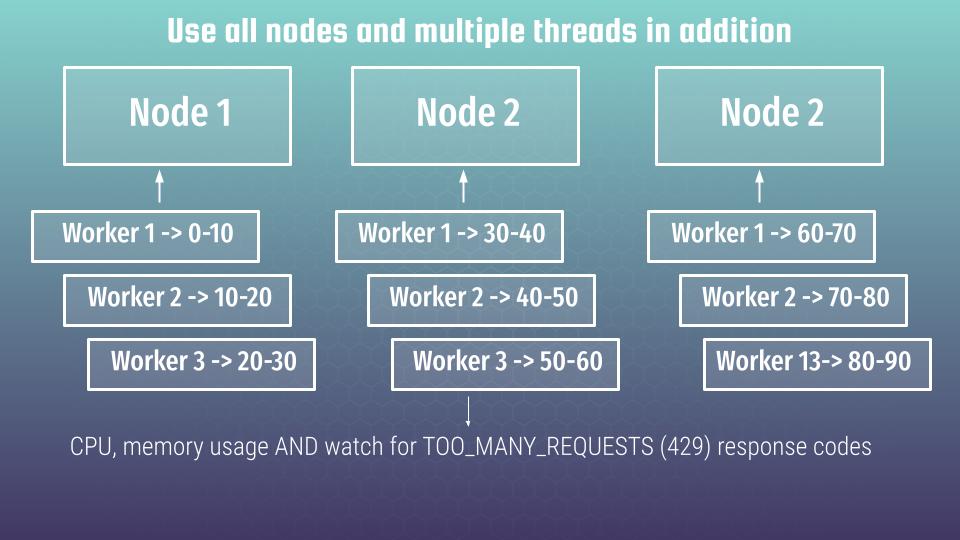

At second – use all nodes and multiple threads in addition. In most cases the narrow place is not Elasticsearch, which has rather high index rate – but the processing of data by itself. E.g taking according data from different storages, making some transformations around it and so on. Use resources effectively – spread the work between many workers and many nodes at the same time. Let’s assume that you have 3 node cluster and you need to index 90 mln documents. You can apply such a scheme as an example.

For example, you can assign the first 10 million documents to the first worker. At the same time you are having running the second worker that will process, next 10 million package and so on, and so on. Moreover, you may run all workers simultaneously at all nodes. In such a way Elasticsearch will be able to index all documents at once and then will replicate according data between the nodes. The main question, which appears while using current approach, is: How to choose the amount of workers?

Simply test it at practice – monitor your CPU, memory usage and watch for another essential parameter, which is called TOO_MANY_REQUESTS (429) response codes. And if you will notice such a response code, like 429, or got it constantly at your logs – then it means, that you reach some “up” threshold. And that’s the way you can get the maximum number of workers you can run. Try to experiment – try to change different batches, try to run as many workers as you can and use all your CPU and memory resources. And in most cases, Elasticsearch would be able to process all your request. In such a way you can reach the high optimal speed of indexing.

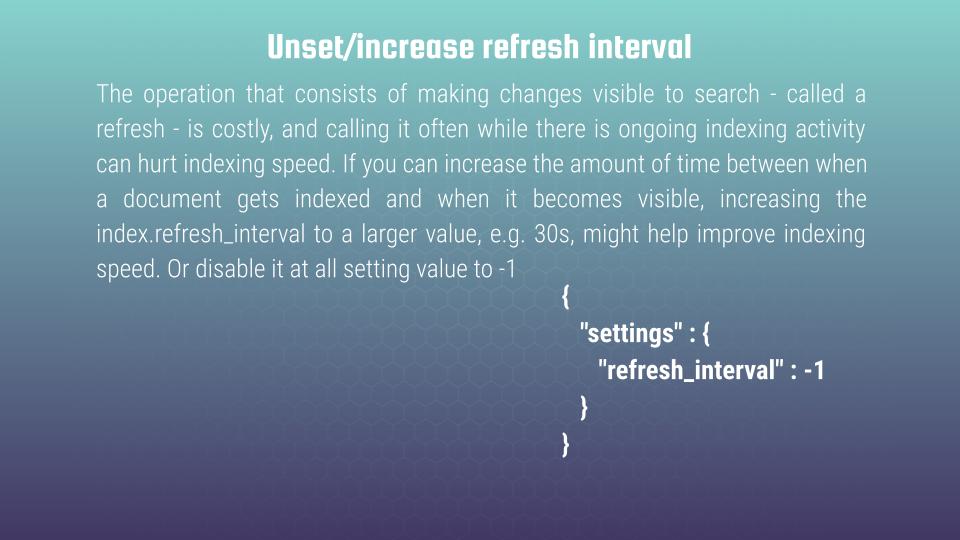

Another essential, thing, that can speed the whole indexing process, is the refresh interval. The operation that consists of making changes visible to search – called a refresh – is costly, and calling it often while there is ongoing indexing activity can hurt indexing speed. If you can increase the amount of time between when a document gets indexed and when it becomes visible, increasing the index.refresh_interval to a larger value, e.g. 30s, or disable it at all, by setting value to -1. At my practice, I’m only using the second option 🙂

There are some other tricks that you can use to speed up your indexing process:

- change the number of replicas. If you are sure your cluster is stable, you may set index.number_of_replicas parameter to zero value, and then, after indexing, you can back to your initial values – that trick helps a lot

- try to use auto generated ID to your documents (if you don’t need to preserve some ID consistency with your own scheme). It allows Elasticsearch to not check if some concrete ID exist and simply use auto generated value, that works much more faster.

- use faster hardware – you may scale up Elasticsearch nodes vertically for indexing time to get more memory+CPU, that will help you to run more workers and to finish your indexing faster.

- give memory to file system cache – don’t give all memory to java virtual machine heap (JVM). Better to stay with 50% only for JVM and leave all other four file system cache – it is especially essential in case you’re providing indexing.

Thank you for you attention

P.S.

Below are the links to the courses, where you can find a lot of useful and, first of all, practical information about Elasticsearch: